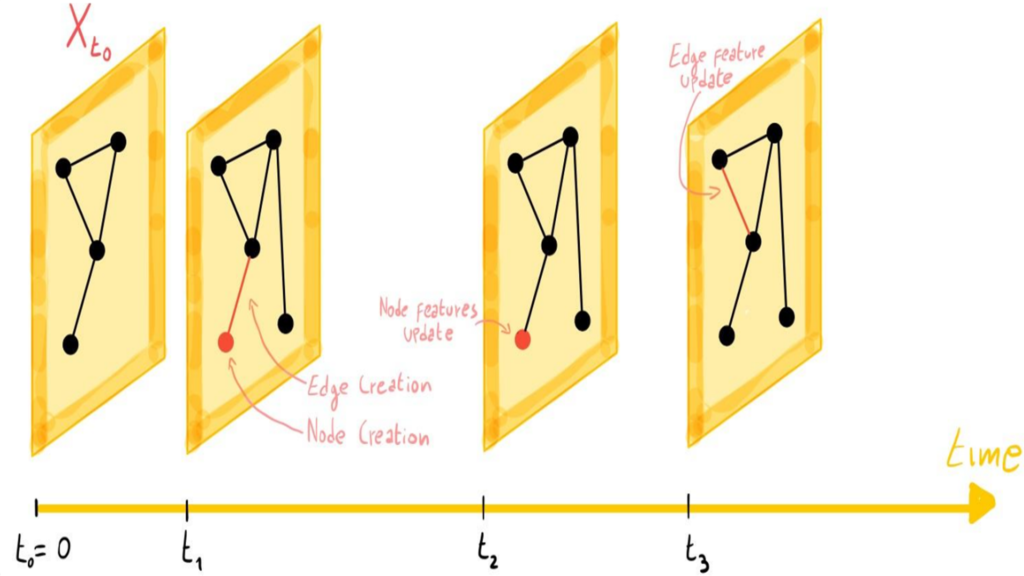

Temporal Graph Embedding

Temporal graph embedding represents the nodes and edges of a dynamic graph as vectors in a low-dimensional space, so that the vectors capture how the graph evolves over time. The learned representations support downstream tasks such as link prediction, anomaly detection, and community discovery in time-varying networks. Because they encode how relationships between entities change, they are useful for analyzing dynamic systems such as social networks, citation networks, and financial transactions.
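To make the idea concrete, here is a minimal sketch of one simple way to obtain time-aware node vectors. It is an illustration under stated assumptions, not a specific published method: edges are `(u, v, t)` triples, older edges are down-weighted with an exponential decay, and the embedding comes from a truncated SVD of the resulting weighted adjacency matrix. The function names, the `decay` rate, and the toy edge list are all hypothetical.

```python
import numpy as np

def decayed_adjacency(edges, num_nodes, t_now, decay=0.5):
    """Build a symmetric adjacency matrix in which an edge observed at
    time t contributes exp(-decay * (t_now - t)). Edges later than t_now
    are ignored, so the matrix reflects only what was known at t_now."""
    A = np.zeros((num_nodes, num_nodes))
    for u, v, t in edges:
        if t <= t_now:
            w = np.exp(-decay * (t_now - t))
            A[u, v] += w
            A[v, u] += w
    return A

def embed(A, dim):
    """Embed nodes with a truncated SVD: keep the top `dim` singular
    directions and scale them by the square root of the singular values."""
    U, S, _ = np.linalg.svd(A)
    return U[:, :dim] * np.sqrt(S[:dim])

# Toy dynamic graph with four nodes; each edge is (source, target, timestamp).
edges = [(0, 1, 0.0), (1, 2, 1.0), (2, 3, 2.0), (0, 3, 3.0)]

# Embeddings of the same nodes at two points in time.
emb_t1 = embed(decayed_adjacency(edges, 4, t_now=1.0), dim=2)
emb_t3 = embed(decayed_adjacency(edges, 4, t_now=3.0), dim=2)
```

Comparing `emb_t1` and `emb_t3` row by row gives a rough picture of how each node's position shifts as new edges arrive and old ones fade. Learned models typically replace the SVD step with a trained encoder, but the input (timestamped edges) and the output (one vector per node per time) have the same shape.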

Attention Is All You Need

“Attention Is All You Need” (Vaswani et al., 2017) introduced the Transformer architecture, which showed that sequence tasks such as machine translation can be performed effectively without recurrent or convolutional layers. Its core innovation is to rely entirely on the attention mechanism, which lets the model weigh the importance of every part of the input sequence when processing each position. The architecture has since driven progress across NLP tasks, including machine translation, text summarization, and question answering.
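The weighting step can be sketched with scaled dot-product attention, which the paper defines as softmax(QKᵀ / √d_k)·V. The version below is a simplified, dependency-free illustration: it omits the learned query/key/value projections, multiple heads, and masking that a full Transformer layer uses, and the function names and toy inputs are assumptions made for this example.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    For each query, score every key by dot product, scale by sqrt(d_k),
    normalize the scores with softmax, and return the weighted sum of
    the value vectors together with the attention weights."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Self-attention on a toy sequence of three 2-dimensional token vectors:
# the same vectors serve as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(x, x, x)
```

Each row of `weights` sums to 1 and shows how much one position attends to every other position. In this toy input the third token has the largest dot product with itself, so it places the most weight on its own value vector.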