Explainable Spatio-Temporal GNNs

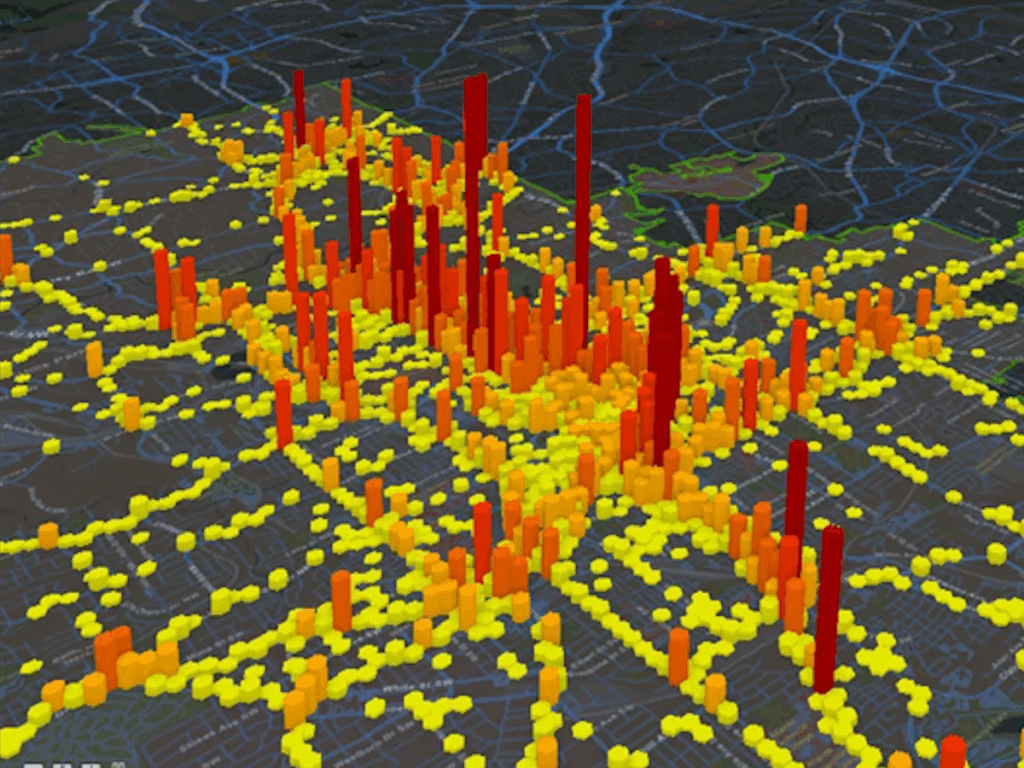

Spatio-temporal graph neural networks (STGNNs) are widely used to model spatio-temporal dependencies in real-world urban applications such as intelligent transportation and public safety. However, their black-box nature limits interpretability, which hinders their use in settings such as urban resource allocation and policy formulation, where decisions must be justified. To bridge this gap, the authors propose STExplainer, an Explainable Spatio-Temporal Graph Neural Networks framework that gives STGNNs inherent explainability, so that they produce accurate predictions and faithful explanations at the same time.

Learning Model-Agnostic Counterfactual Explanations for Tabular Data

“Learning Model-Agnostic Counterfactual Explanations for Tabular Data” focuses on methods for explaining the predictions of machine learning models applied to tabular data, such as those used in finance or healthcare. These techniques generate “counterfactual” examples: hypothetical inputs in which minor changes to the original data lead to a different model prediction. Such examples give insight into the model’s decision-making process, build trust, and show users what they would need to change to influence the model’s output. Because the approach is “model-agnostic,” it can be applied to a wide range of machine learning models without requiring knowledge of their internal workings.
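To make the idea of a counterfactual concrete, here is a minimal sketch in Python. It is not the method from the paper: it is a brute-force search that changes one feature at a time and only ever calls the model's prediction function, which is what makes it model-agnostic. The model `toy_credit_model`, the feature names, the step sizes, and the bounds are all hypothetical and chosen purely for illustration.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Row = List[float]


def toy_credit_model(row: Sequence[float]) -> int:
    """Hypothetical black-box classifier: 1 = approved, 0 = rejected."""
    income, debt, years_employed = row
    score = 0.5 * income - 0.8 * debt + 2.0 * years_employed
    return 1 if score >= 20.0 else 0


def find_counterfactual(
    predict: Callable[[Sequence[float]], int],
    row: Row,
    steps: Sequence[float],
    bounds: Sequence[Tuple[float, float]],
    max_multiples: int = 50,
) -> Optional[Row]:
    """Return the first single-feature change that flips the prediction.

    Changes are tried in order of increasing size (1 step, 2 steps, ...),
    so the result uses the fewest steps among single-feature changes.
    Only `predict` is called; the model's internals are never inspected.
    """
    original = predict(row)
    for k in range(1, max_multiples + 1):
        for i, step in enumerate(steps):
            for direction in (1.0, -1.0):
                value = row[i] + direction * k * step
                low, high = bounds[i]
                if not low <= value <= high:
                    continue  # skip implausible feature values
                candidate = list(row)
                candidate[i] = value
                if predict(candidate) != original:
                    return candidate
    return None  # no single-feature counterfactual within the budget


# Assumed example applicant: income, debt, years employed.
applicant = [30.0, 10.0, 2.0]
counterfactual = find_counterfactual(
    toy_credit_model,
    applicant,
    steps=[1.0, 1.0, 1.0],
    bounds=[(0.0, 200.0), (0.0, 100.0), (0.0, 40.0)],
)
print("original prediction:", toy_credit_model(applicant))
print("counterfactual:", counterfactual)
```

In this toy setup the search reports that the rejected applicant would be approved if `years_employed` were 7 instead of 2, with everything else unchanged. Practical methods typically go further, for example by changing several features at once and by preferring counterfactuals that are realistic for the data distribution.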