Efficient Question-Answering with Strategic Multi-Model Collaboration on Knowledge Graphs

This research presents EffiQA, a framework that uses large language models (LLMs) for high-level reasoning and planning while offloading computationally expensive knowledge graph (KG) exploration to a specialized, lightweight model. This division of labor yields significant gains in both efficiency and accuracy on knowledge-based question answering tasks.

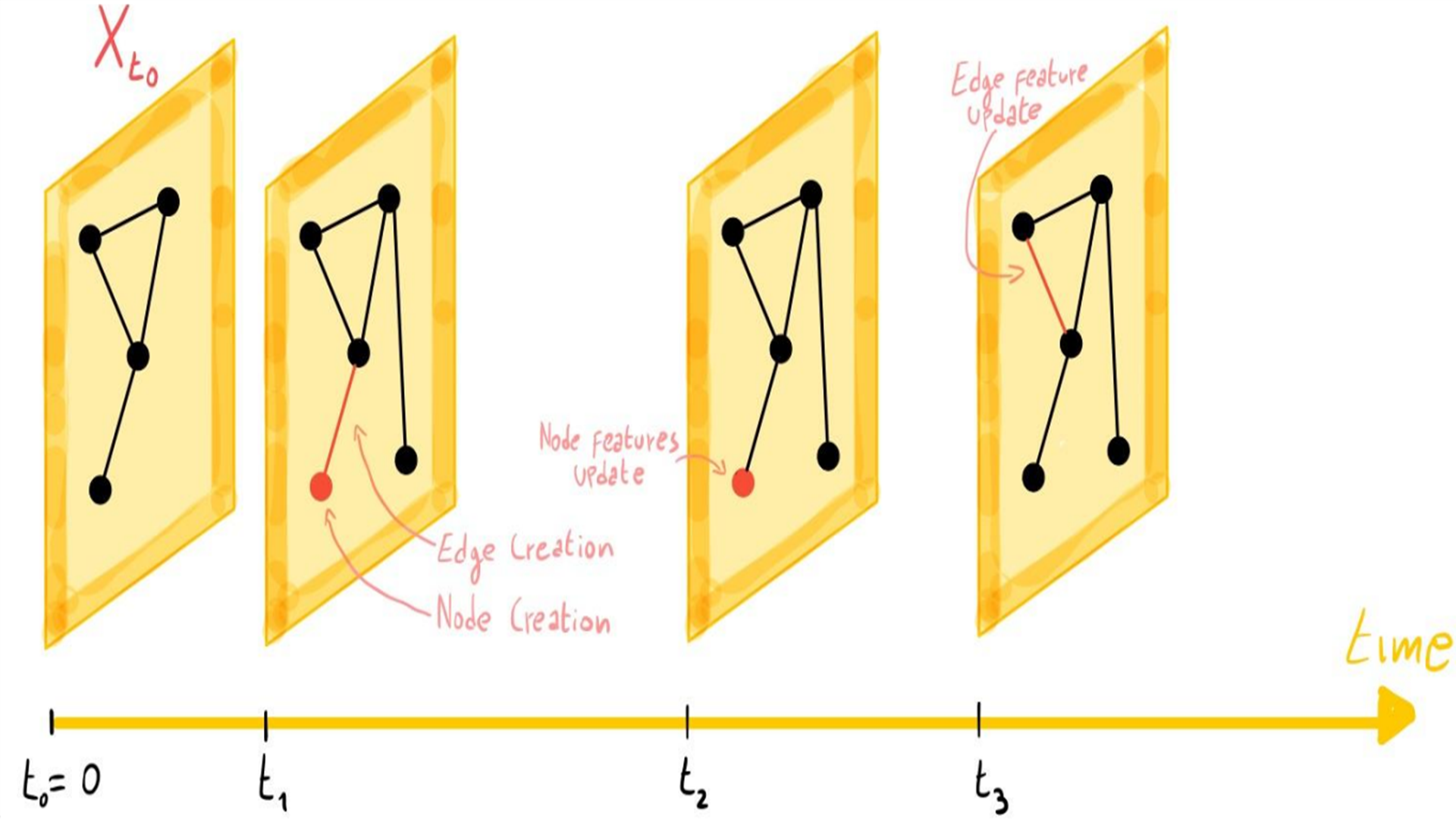

EffiQA operates iteratively: the LLM first guides the exploration process by identifying potential reasoning pathways within the KG.

A specialized model then prunes the search space, keeping only the most promising paths so that exploration stays focused and avoids unnecessary computational overhead. This collaboration lets EffiQA navigate complex reasoning chains in KGs while remaining computationally efficient.
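The plan-then-prune loop described above can be sketched in Python. This is a minimal illustration under explicit assumptions, not EffiQA's actual implementation. The LLM planner is replaced by a hypothetical `plan_relations` stub that returns a fixed relation sequence. The specialized pruning model is replaced by a hypothetical `score_path` heuristic that counts hop-by-hop agreement with that plan. Pruning uses a simple beam of width `beam`. The toy graph, the entity names, and every function name here are illustrative only.

```python
# A toy knowledge graph: entity -> list of (relation, neighbor) edges.
KG = dict[str, list[tuple[str, str]]]
# A path alternates entity, relation, entity, relation, ..., entity.
Path = tuple[str, ...]


def plan_relations(question: str) -> list[str]:
    """Hypothetical stand-in for the LLM planner: proposes the relation
    sequence it expects the answer path to follow. A real system would
    prompt an LLM with `question`; here the plan is hard-coded."""
    return ["directed_by", "born_in"]


def score_path(path: Path, plan: list[str]) -> float:
    """Hypothetical stand-in for the lightweight pruning model: returns the
    fraction of hops whose relation matches the plan at the same position."""
    relations = path[1::2]
    matches = sum(1 for rel, planned in zip(relations, plan) if rel == planned)
    return matches / max(len(relations), 1)


def explore(kg: KG, start: str, question: str,
            beam: int = 2, max_hops: int = 2) -> list[Path]:
    """Expand paths hop by hop, keeping only the `beam` best at each step."""
    plan = plan_relations(question)  # LLM: high-level guidance
    frontier: list[Path] = [(start,)]
    for _ in range(max_hops):
        # Extend every surviving path by one outgoing edge.
        candidates = [
            path + (rel, nbr)
            for path in frontier
            for rel, nbr in kg.get(path[-1], [])
        ]
        if not candidates:
            break
        # Small model: rank candidates and prune to the top `beam`.
        candidates.sort(key=lambda p: score_path(p, plan), reverse=True)
        frontier = candidates[:beam]
    return frontier


# Usage on a tiny hand-built graph.
kg: KG = {
    "Inception": [("directed_by", "Christopher Nolan"), ("released_in", "2010")],
    "Christopher Nolan": [("born_in", "London"), ("spouse", "Emma Thomas")],
    "2010": [],
    "Emma Thomas": [],
}
best = explore(kg, "Inception", "Where was the director of Inception born?", beam=1)[0]
print(best[-1])  # prints: London
```

Keeping only the top `beam` paths at each hop is what bounds the cost: the number of paths scored per hop is at most `beam` times the largest out-degree, instead of growing exponentially with depth. In a real system, the two stubs would be replaced by an LLM call and a trained model.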